Chinese Company Huawei Tested AI That Can Recognize Uighurs and Alert Police

Picture this alternative reality: In 2003, as the Iraq War rages and we’re just two years removed from 9/11, Microsoft develops facial recognition technology that can pinpoint individuals in crowds from ethnic subgroups known to be capable of terrorism.

Our country is at war. We just lost nearly 3,000 people in the single most destructive terror attack in modern history. We are still in the grip, however irrationally, of the fear that another 9/11 is just around the corner. With some breakthrough software deployed at our airports, all courtesy of our biggest tech company, our lives just became a lot safer. A cause for celebration, right?

Of course not. All but the most strident security hawks would scream discrimination. Our civil rights were being trampled, minorities were being singled out — and for what? Is there any evidence this would have stopped a terror attack? It’s beyond the pale to even consider.

Huawei, a Chinese company that is the world’s biggest producer of telecommunications equipment, has tested technology that makes this hypothetical real. Not only that, the company did so in a country where the minority being identified (ethnic Uighurs, a Turkic and predominantly Muslim people who hail from the Xinjiang region) can be put into re-education camps simply for being who they are.

And yet, this didn’t exactly set off alarm bells across the world.

The first sign something was up came Dec. 8 from The Washington Post, which reported that “Huawei has tested facial recognition software that could send automated ‘Uighur alarms’ to government authorities when its camera systems identify members of the oppressed minority group, according to an internal document that provides further details about China’s artificial-intelligence surveillance regime.

“A document signed by Huawei representatives — discovered by the research organization IPVM and shared exclusively with The Washington Post — shows that the telecommunications firm worked in 2018 with the facial recognition start-up Megvii to test an artificial-intelligence camera system that could scan faces in a crowd and estimate each person’s age, sex and ethnicity.”

That information would then be sent to authorities, potentially flagging the people identified for police surveillance and putting them at risk of detention.

And this wasn’t a matter of months of investigative reporting — which isn’t to say The Post didn’t do a bang-up job. However, it’s worth noting that a document detailing all of this could be found on Huawei’s website.

For whatever it’s worth, the document was removed after the companies were contacted about it.

Glenn Schloss, a Huawei spokesman, told The Post that the program “is simply a test and it has not seen real-world application. Huawei only supplies general-purpose products for this kind of testing. We do not provide custom algorithms or applications.”

That’s not quite what China said about targeting Uighurs.

According to The Post, “Chinese officials have said such systems reflect the country’s technological advancement, and that their expanded use can help government responders and keep people safe. But to international rights advocates, they are a sign of China’s dream of social control — a way to identify unfavorable members of society and squash public dissent.”

The Post said China’s foreign ministry did not immediately respond to requests for comment.

If the whole thing sounds scary, Maya Wang, of the advocacy group Human Rights Watch, says it goes even further than that.

“China’s surveillance ambition goes way, way, way beyond minority persecution,” Wang told The Post, but “the persecution of minorities is obviously not exclusive to China. … And these systems would lend themselves quite well to countries that want to criminalize minorities.”

And make no mistake: This is hardly just proof of concept stuff.

“People don’t go to the trouble of building expensive systems like this for nothing,” said Jonathan Frankle, a researcher in the Computer Science and Artificial Intelligence Lab at the Massachusetts Institute of Technology. “These aren’t people burning money for fun. If they did this, they did it for a very specific reason in mind. And that reason is very clear.”

Meanwhile, a Sunday follow-up in The Post found this was hardly the only tech product Huawei had tested or marketed to detect a person’s ethnicity.

You would think this passage would elicit rage in the United States, and yet it didn’t: “Protests on the scale of Black Lives Matter would be nearly impossible to organize in mainland China — partly because of these very surveillance technologies.

“One of the products jointly offered by Huawei and Chinese surveillance equipment supplier Vikor can send an alert if a crowd starts to form, according to a marketing presentation. The alert can be set for clusters of three, six, 10, 20 or 50 people.”

Also not worrisome: “In the United States, police have also sought to use some technologies, such as facial recognition, to investigate crimes but have stopped short of publicly adopting technologies to analyze people’s voices and ethnicities. In the wake of nationwide protests over the summer against police abuse, Microsoft, Amazon and IBM banned police from using their facial recognition technology. (Amazon founder and chief executive Jeff Bezos owns The Post.)”

And no one really cares — with some exceptions.

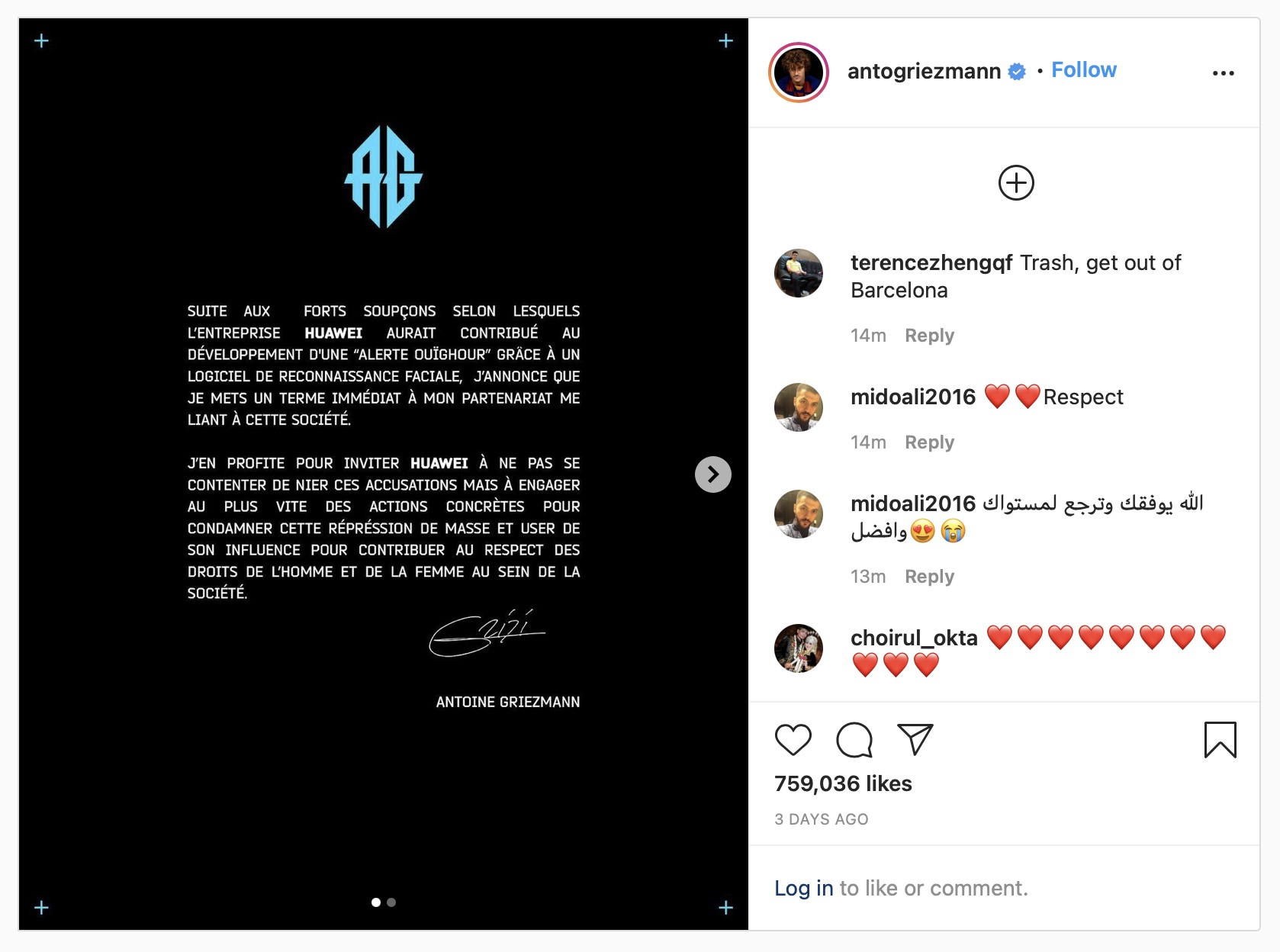

French soccer player Antoine Griezmann, who has been a Huawei brand ambassador since 2017, announced Dec. 10 that he would end his relationship with the company.

“I take this opportunity to invite Huawei to not just deny these accusations,” Griezmann said in an Instagram post, “but to take concrete actions as quickly as possible to condemn this mass repression, and to use its influence to contribute to the respect of human and women’s rights in society.”

He’s one of the few pushing back against Huawei or China, however. What a surprise.

Meanwhile, let this be any American company and the hue and cry would be deafening. Again, what a surprise.

What we need is a presumptive president-elect who will stand up to Huawei and to Beijing. What’s clear is that we don’t have one.

This article appeared originally on The Western Journal.